During a troubleshooting session in Component Pack, I checked the Kubernetes events.

kubectl get events -n connections

18m Warning FailedGetScale horizontalpodautoscaler/middleware-jsonapi no matches for kind "Deployment" in group "extensions"

18m Warning FailedGetScale horizontalpodautoscaler/mwautoscaler no matches for kind "Deployment" in group "extensions"

18m Warning FailedGetScale horizontalpodautoscaler/te-creation-wizard no matches for kind "Deployment" in group "extensions"

18m Warning FailedGetScale horizontalpodautoscaler/teams-share-service no matches for kind "Deployment" in group "extensions"

18m Warning FailedGetScale horizontalpodautoscaler/teams-share-ui no matches for kind "Deployment" in group "extensions"

18m Warning FailedGetScale horizontalpodautoscaler/teams-tab-api no matches for kind "Deployment" in group "extensions"

18m Warning FailedGetScale horizontalpodautoscaler/teams-tab-ui no matches for kind "Deployment" in group "extensions"

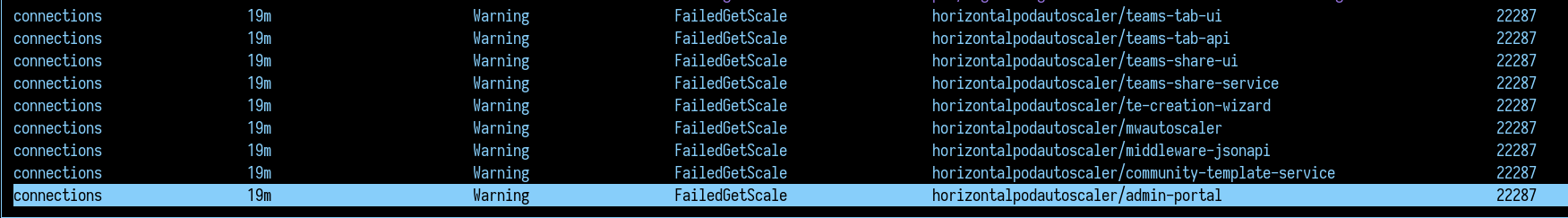

Or in k9s:

That adds up to several thousand events from failing autoscalers. The Component Pack documentation does not mention HPA anywhere, so I checked the Kubernetes documentation: HorizontalPodAutoscaler Walkthrough
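To see which autoscalers are affected without scrolling through thousands of lines, the event list can be reduced to the distinct HPA names. This is only a minimal sketch: it assumes the default column layout of kubectl get events (LAST SEEN, TYPE, REASON, OBJECT, MESSAGE), and the function name failing_hpas is made up for this example.

```shell
# Hypothetical helper: reads `kubectl get events --no-headers` output on stdin
# and prints every HPA that reported FailedGetScale, each name once.
# Assumes the default columns: LAST SEEN, TYPE, REASON, OBJECT, MESSAGE.
failing_hpas() {
  awk '$3 == "FailedGetScale" && $4 ~ /^horizontalpodautoscaler\// {
         sub(/^horizontalpodautoscaler\//, "", $4)
         print $4
       }' | sort -u
}

# Usage against a live cluster:
#   kubectl get events -n connections --no-headers | failing_hpas
```

Filtering on the REASON column rather than on the message text keeps the helper independent of the exact error wording.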

One prerequisite for using HPA (HorizontalPodAutoscaler) is that Metrics Server is installed on the Kubernetes cluster.

Install Metrics Server

https://github.com/kubernetes-sigs/metrics-server#deployment

Install with kubectl

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/high-availability-1.21+.yaml

Install with helm

helm repo add metrics-server https://kubernetes-sigs.github.io/metrics-server/

helm upgrade --install metrics-server metrics-server/metrics-server
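Whichever install method is used, it is worth confirming that the metrics API actually answers before looking at the autoscalers again. The sketch below assumes Metrics Server has registered its usual APIService, v1beta1.metrics.k8s.io; the function name metrics_api_available is hypothetical.

```shell
# Hypothetical helper: succeeds once the metrics API registered by
# Metrics Server reports the condition Available=True.
metrics_api_available() {
  status=$(kubectl get apiservice v1beta1.metrics.k8s.io \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}')
  [ "$status" = "True" ]
}

# Usage against a live cluster:
#   metrics_api_available && kubectl top pods -n connections
```

Checking the APIService instead of the Deployment avoids guessing the namespace, which can differ between the kubectl and helm installs.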

Fix apiVersion

Even after Metrics Server is installed, the events still show errors. So let's take a closer look at one of the autoscalers:

kubectl describe hpa teams-tab-ui -n connections

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedGetScale 27m (x22287 over 3d21h) horizontal-pod-autoscaler no matches for kind "Deployment" in group "extensions"

Searching for the error message, I found: Horizontal Pod Autoscaling failing after upgrading to Google Kubernetes Engine 1.16 with error: no matches for kind "Deployment" in group "extensions"

Kubernetes 1.16 stopped serving Deployments in the extensions API group, so the HPA configuration needs to be changed from:

    ...
    scaleTargetRef:
      apiVersion: extensions/v1beta1
      kind: Deployment
      name: admin-portal
    ...

to

    ...
    scaleTargetRef:
      apiVersion: apps/v1
      kind: Deployment
      name: admin-portal
    ...
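Editing every HPA by hand gets tedious, so the change can also be scripted. The following is a sketch under assumptions, not the documented Component Pack procedure: the helper names stale_hpas and patch_hpa_api_version are invented here, and the namespace defaults to connections as used throughout this post.

```shell
# Hypothetical helper: reads "NAME APIVERSION" pairs on stdin and prints the
# names whose scale target still points at the removed "extensions" group.
stale_hpas() {
  awk '$2 ~ /^extensions\// { print $1 }'
}

# Hypothetical helper: switches one HPA's scale target to apps/v1.
# $1 = HPA name, $2 = namespace (defaults to "connections" as in this post).
# A merge patch leaves kind and name of the scaleTargetRef untouched.
patch_hpa_api_version() {
  kubectl patch hpa "$1" -n "${2:-connections}" --type merge \
    -p '{"spec":{"scaleTargetRef":{"apiVersion":"apps/v1"}}}'
}

# Usage against a live cluster:
#   kubectl get hpa -n connections --no-headers \
#     -o custom-columns=NAME:.metadata.name,API:.spec.scaleTargetRef.apiVersion \
#     | stale_hpas \
#     | while read -r name; do patch_hpa_api_version "$name"; done
```

Listing first and patching second makes it easy to review the affected names before anything is changed.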

Fix customizer HPA

Now most of the HPAs start working, except for mwautoscaler. Here, the deployment name in scaleTargetRef is wrong and needs to be changed from mwautoscaler to mw-proxy. I also changed minReplicas from the default of 3 to 1, the value all other HPAs use.

    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      annotations:
        meta.helm.sh/release-name: mw-proxy
        meta.helm.sh/release-namespace: connections
      creationTimestamp: "2023-02-08T15:51:28Z"
      labels:
        app.kubernetes.io/managed-by: Helm
        chart: mw-proxy-0.1.0-20230329-171529
        environment: ""
        heritage: Helm
        name: fsautoscaler
        release: mw-proxy
        type: autoscaler
      name: mwautoscaler
      namespace: connections
      resourceVersion: "2105787"
      uid: 1bf749b4-f4cd-4760-a2e0-357ff0e6772a
    spec:
      maxReplicas: 3
      metrics:
      - resource:
          name: cpu
          target:
            averageUtilization: 80
            type: Utilization
        type: Resource
      minReplicas: 1
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: mw-proxy
    status:
      conditions:
      - lastTransitionTime: "2023-05-30T10:41:57Z"
        message: recommended size matches current size
        reason: ReadyForNewScale
        status: "True"
        type: AbleToScale
      - lastTransitionTime: "2023-05-30T10:41:57Z"
        message: the HPA was able to successfully calculate a replica count from cpu resource
          utilization (percentage of request)
        reason: ValidMetricFound
        status: "True"
        type: ScalingActive
      - lastTransitionTime: "2023-05-30T10:41:57Z"
        message: the desired count is within the acceptable range
        reason: DesiredWithinRange
        status: "False"
        type: ScalingLimited
      currentMetrics:
      - resource:
          current:
            averageUtilization: 10
            averageValue: 5m
          name: cpu
        type: Resource
      currentReplicas: 1
      desiredReplicas: 1
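The same result can be reached without opening an editor. This is a sketch of one possible way, assuming the HPA name, namespace and target from the manifest above; the wrapper function fix_mwautoscaler is hypothetical.

```shell
# Hypothetical helper: repoints the mwautoscaler HPA at the mw-proxy
# Deployment and lowers minReplicas to 1, matching the manifest above.
fix_mwautoscaler() {
  kubectl patch hpa mwautoscaler -n connections --type merge -p '{
    "spec": {
      "minReplicas": 1,
      "scaleTargetRef": {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "name": "mw-proxy"
      }
    }
  }'
}

# Usage against a live cluster:
#   fix_mwautoscaler && kubectl describe hpa mwautoscaler -n connections
```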

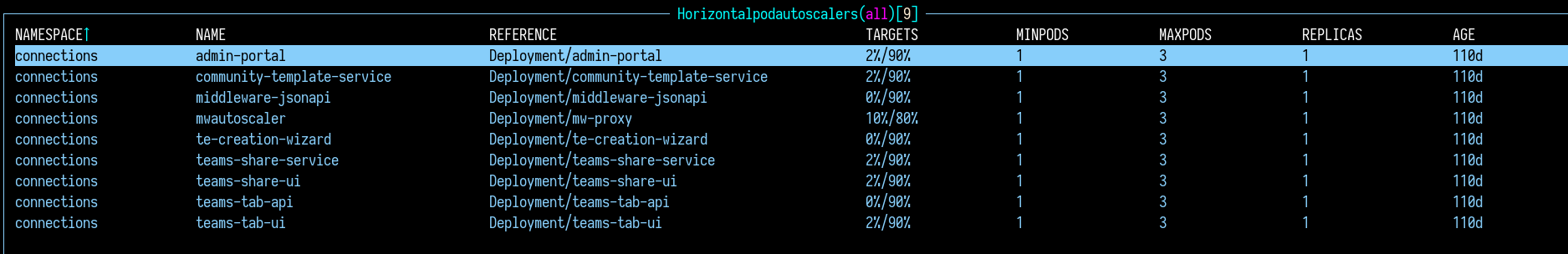

Working HPA

With these changes HPA starts working:
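One way to confirm this from the command line is to read the ScalingActive condition that also appears in the mwautoscaler status. The sketch assumes the cluster serves HPAs as autoscaling/v2, where conditions are part of the status; the function name hpa_scaling_active is made up for this example.

```shell
# Hypothetical helper: succeeds when the given HPA reports ScalingActive=True.
# $1 = HPA name, $2 = namespace (defaults to "connections" as in this post).
hpa_scaling_active() {
  status=$(kubectl get hpa "$1" -n "${2:-connections}" \
    -o jsonpath='{.status.conditions[?(@.type=="ScalingActive")].status}')
  [ "$status" = "True" ]
}

# Usage against a live cluster:
#   for hpa in mwautoscaler teams-tab-ui; do
#     hpa_scaling_active "$hpa" || echo "$hpa: not scaling yet"
#   done
```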

Interestingly, the newly introduced middleware-jsonapi pod also ships with an HPA configuration, but it uses the same outdated apiVersion as the others.